Background

Artificial intelligence

The hype surrounding artificial intelligence (AI) has, of course, not passed us by. Initially, the central question was whether this hype is justified or exaggerated. To find out, we began developing several proofs of concept (PoCs). Our aim was to identify the strengths of the new AI models and to estimate the effort required to implement specific use cases successfully.

Proofs of Concept in AI x neosfer

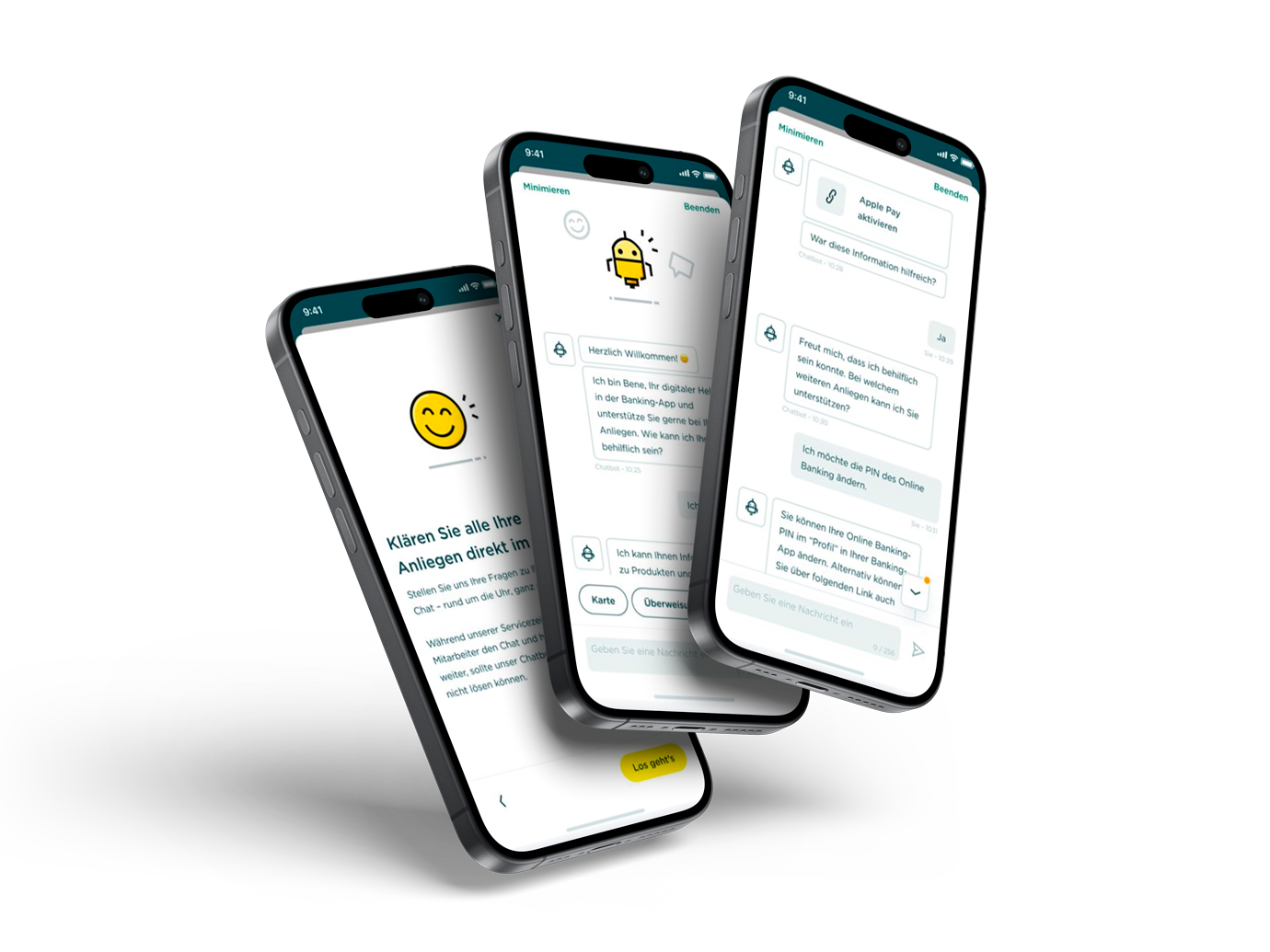

Proof-of-Concept 1: Commerzbank Service Chatbot powered by AI

In our first proof of concept (PoC) on AI, we investigated how disruptive this new technology actually is. To do this, we ran a comparison. At the time of this PoC, Commerz Direktservice’s chatbot Bene had just been released. Bene was developed as a chatbot in the “traditional way”, with intensive manual training and a set of ready-made questions and answers. This development took months and ultimately led to a good result. Using OpenAI’s then newly released GPT-3.5 model (the model behind ChatGPT), we tried to “rebuild” Bene and assessed the quality of the result. The surprising finding: manual training of the model was no longer necessary. Using a purpose-built application, we simply fed all publicly available pages of Commerz Direktservice into the model and could immediately start asking questions. The result convinced us: large language models (LLMs) are undoubtedly the way forward for service bots.
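
The PoC description does not say how the application passed the pages to the model. One common pattern, assumed here, is to split the page text into chunks and place them in the prompt as context, together with an instruction to answer only from that context. The following Python sketch shows that prompt assembly; `chunk_text`, `build_prompt`, the chunk sizes, the character budget and the prompt wording are all hypothetical, and the actual call to the model is left out.

```python
from typing import List


def chunk_text(text: str, max_words: int = 200, overlap: int = 20) -> List[str]:
    """Split text into overlapping windows of words (hypothetical chunking scheme)."""
    if overlap >= max_words:
        raise ValueError("overlap must be smaller than max_words")
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # the last window already reaches the end of the text
    return chunks


def build_prompt(question: str, pages: List[str], max_chars: int = 8000) -> str:
    """Assemble a grounded prompt; chunks that would exceed the budget are skipped."""
    context_parts = []
    used = 0
    for page in pages:
        for chunk in chunk_text(page):
            if used + len(chunk) > max_chars:
                continue
            context_parts.append(chunk)
            used += len(chunk)
    context = "\n---\n".join(context_parts)
    # Hypothetical instruction wording: restrict answers to the supplied context.
    return (
        "Answer the customer's question using only the context below. "
        "If the answer is not in the context, say that you do not know.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )
```

The overlap keeps sentences that straddle a chunk boundary visible in at least one chunk; the character budget is a crude stand-in for the model's context-window limit.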

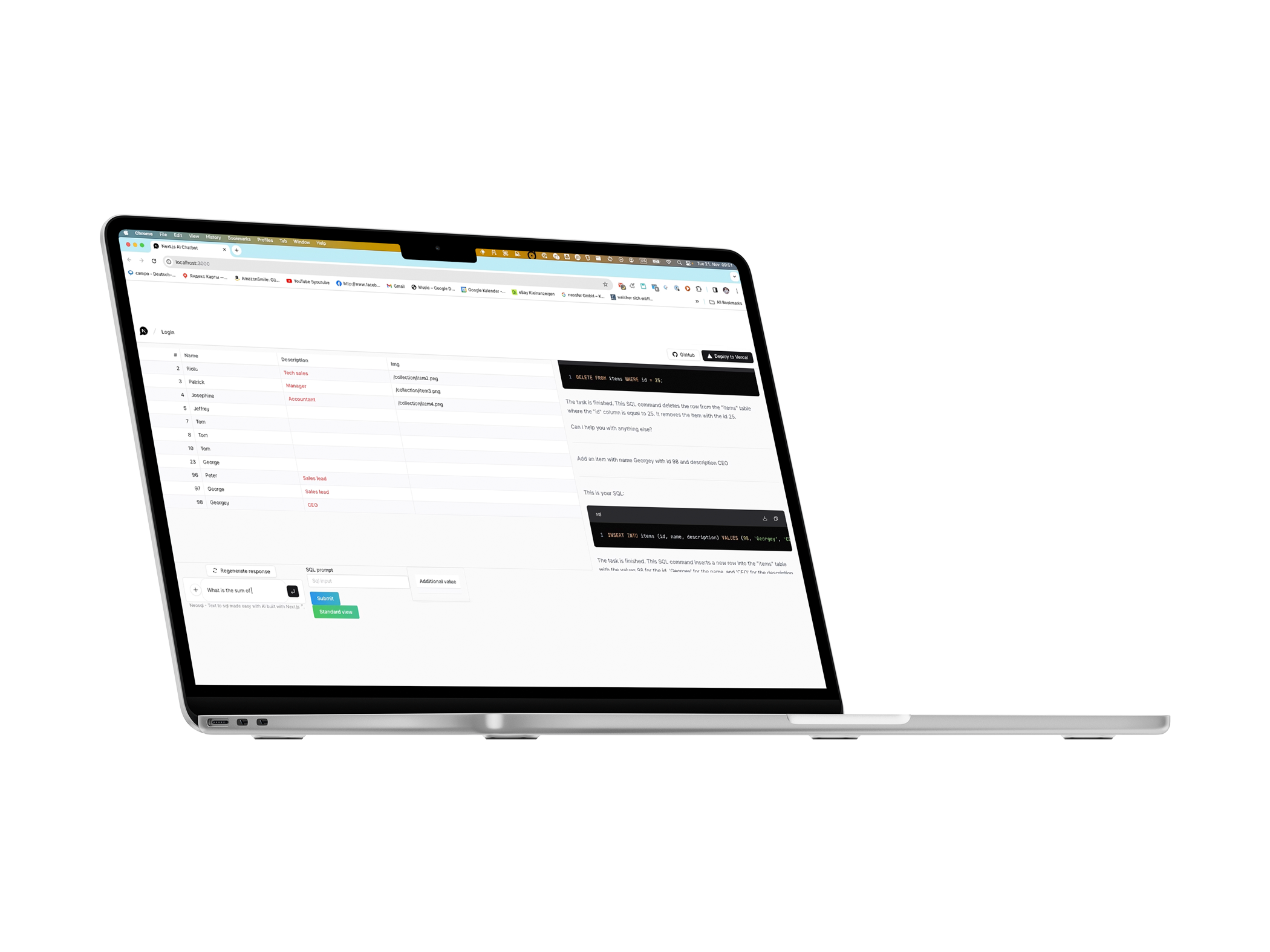

Proof-of-Concept 2: Text to SQL (Co-Pilot)

In discussions with Commerzbank, we noticed that the bank was looking for ways to improve its technology and development processes with code generation and code optimization tools. That’s why we built an LLM-based PoC that translates natural language into SQL statements. This allows people without programming skills to query a database directly.

In less than two weeks, we implemented a co-pilot module that generates SQL from natural language. Such a tool could be used by any employee and thus increase IT efficiency throughout the bank.
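
The post does not describe how the co-pilot works internally. A typical text-to-SQL setup, assumed here, gives the model the database schema in the prompt and checks the generated statement before running it. The sketch below uses Python's built-in `sqlite3` module with a hypothetical `accounts` table; the helper names and prompt text are illustrative, and the model call itself is omitted, so the SQL in the usage example is written by hand.

```python
import sqlite3
from typing import List, Tuple


def demo_db() -> sqlite3.Connection:
    """In-memory database with a hypothetical table, switched to read-only mode."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE accounts (id INTEGER PRIMARY KEY, owner TEXT, balance REAL)")
    conn.executemany(
        "INSERT INTO accounts (owner, balance) VALUES (?, ?)",
        [("Alice", 1200.0), ("Bob", 350.5)],
    )
    conn.commit()
    conn.execute("PRAGMA query_only = ON")  # any later write raises an error
    return conn


def schema_description(conn: sqlite3.Connection) -> str:
    """Collect the CREATE TABLE statements so the model knows tables and columns."""
    rows = conn.execute(
        "SELECT sql FROM sqlite_master WHERE type = 'table' AND sql IS NOT NULL"
    ).fetchall()
    return "\n".join(row[0] + ";" for row in rows)


def build_sql_prompt(question: str, schema: str) -> str:
    # Hypothetical prompt wording for the (omitted) LLM call.
    return (
        "Translate the question into one SQLite SELECT statement.\n"
        f"Schema:\n{schema}\n\n"
        f"Question: {question}\nSQL:"
    )


def run_readonly(conn: sqlite3.Connection, sql: str) -> List[Tuple]:
    """Run model-generated SQL only if it is a single SELECT statement."""
    statement = sql.strip().rstrip(";").strip()
    if ";" in statement:
        raise ValueError("only a single statement is allowed")
    if not statement.lower().startswith("select"):
        raise ValueError("only SELECT statements are allowed")
    return conn.execute(statement).fetchall()


if __name__ == "__main__":
    conn = demo_db()
    prompt = build_sql_prompt("How many accounts are there?", schema_description(conn))
    # Suppose the model answered with this statement:
    print(run_readonly(conn, "SELECT COUNT(*) FROM accounts;"))  # [(2,)]
```

The prefix check is deliberately conservative: it also rejects `WITH` queries and semicolons inside string literals. `PRAGMA query_only` adds a second layer, so writes fail at the database level even if a statement slips past the check.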

Proof-of-Concept 3: Confluence Search

In many IT projects, the documentation grows steadily over time and becomes increasingly complex. As a result, the related databases and workspaces become confusing and hard to keep track of, both for existing employees and especially for new joiners. Finding one’s way through this data jungle can take a lot of time.

We wanted to remedy this by building an LLM tool that searches existing Confluence pages and provides targeted answers to all members of the organization, especially developers. The PoC demonstrated that the approach works, and further expansion is now being considered.
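
How the tool selects relevant Confluence pages is not specified. As a stand-in, the sketch below ranks pages by TF-IDF-weighted term matching in pure Python; the top-ranked pages could then be passed to an LLM as context. Production systems often use embedding-based vector search instead. The function names, page titles and contents are invented for illustration.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple


def tokenize(text: str) -> List[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def rank_pages(query: str, pages: Dict[str, str], top_k: int = 3) -> List[Tuple[str, float]]:
    """Rank pages by the summed TF-IDF weight of the query terms they contain."""
    term_counts = {title: Counter(tokenize(body)) for title, body in pages.items()}
    n_pages = len(term_counts)
    # Document frequency: in how many pages each term occurs.
    doc_freq: Counter = Counter()
    for counts in term_counts.values():
        doc_freq.update(counts.keys())

    def idf(term: str) -> float:
        # Smoothed inverse document frequency; rarer terms weigh more.
        return math.log((n_pages + 1) / (doc_freq[term] + 1)) + 1.0

    query_terms = set(tokenize(query))
    scored = []
    for title, counts in term_counts.items():
        length = sum(counts.values()) or 1
        score = sum(counts[t] / length * idf(t) for t in query_terms)
        if score > 0:  # drop pages that share no term with the query
            scored.append((title, score))
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:top_k]


if __name__ == "__main__":
    pages = {
        "Deployment guide": "How to deploy the service to the Kubernetes cluster. Deploy with helm.",
        "Onboarding": "Welcome new joiners. Request access to Confluence and Git.",
        "Database": "Connection strings for the reporting database.",
    }
    for title, score in rank_pages("How do I deploy to Kubernetes?", pages):
        print(f"{title}: {score:.3f}")
```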

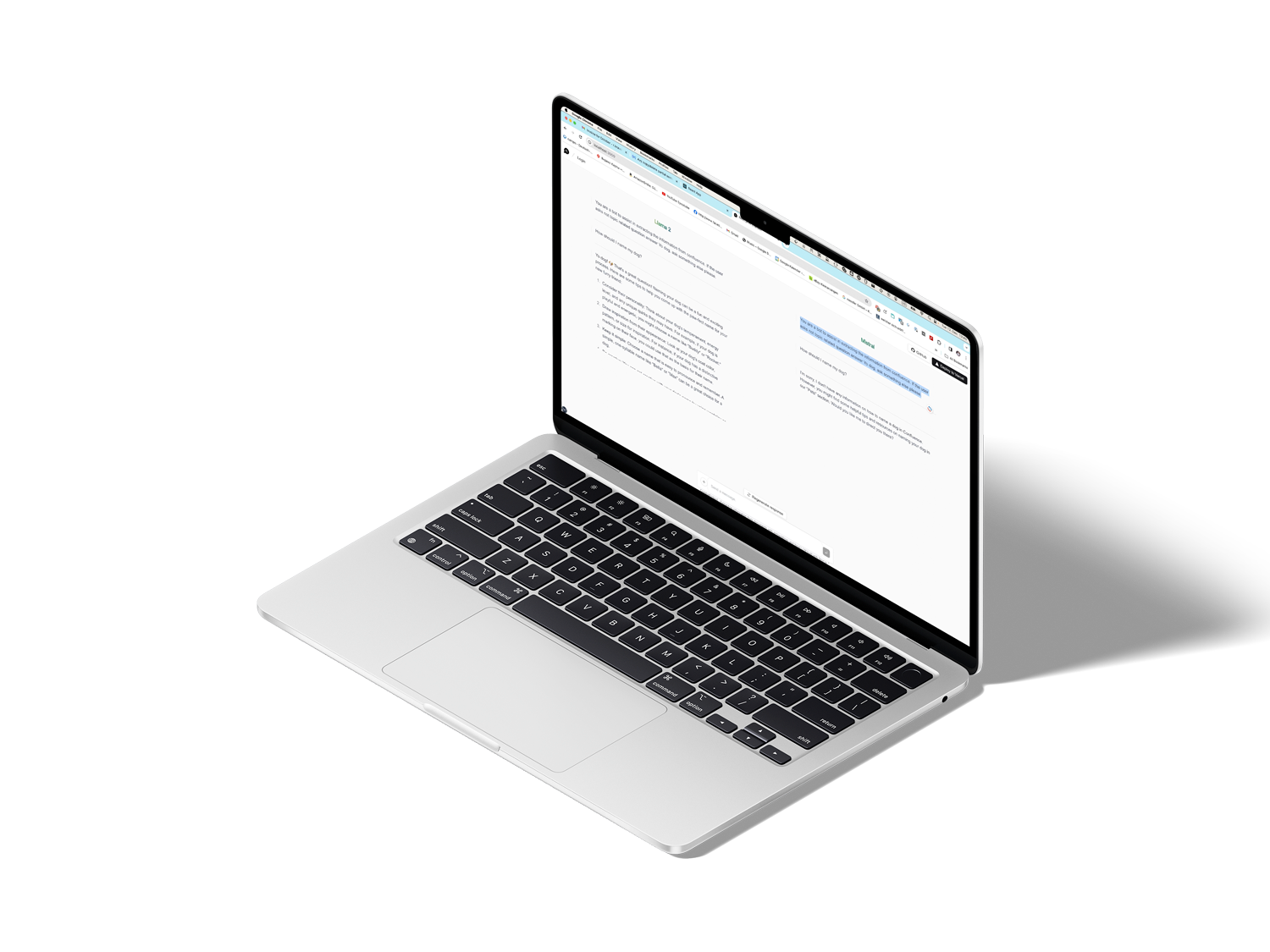

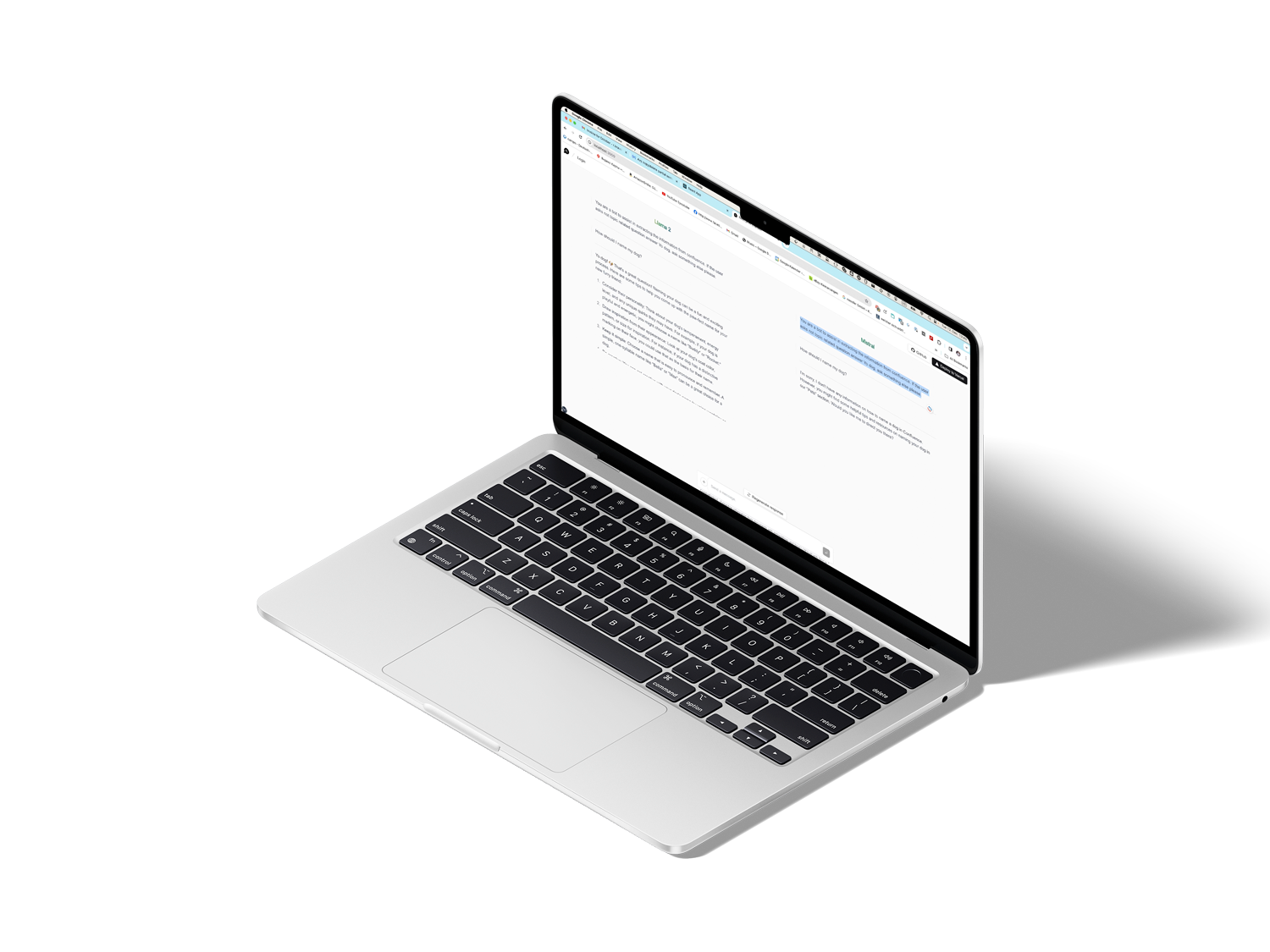

Proof-of-Concept 4: Non-Disclosure Agreements (NDAs)

With this use case, we tested the extent to which generative AI (GenAI) in the form of an LLM can check non-disclosure agreements against Commerzbank’s requirements and suggest possible adjustments. To this end, we built an application that was trained using expired and anonymized NDAs. The aim of the PoC was to let users submit an NDA as input; the application then processes it independently and returns suggested corrections. The final PoC demonstrated this functionality, which is why we consider the project a success. Note, however, that further fine-tuning of the model would have required additional time and computing capacity beyond the scope of a PoC.

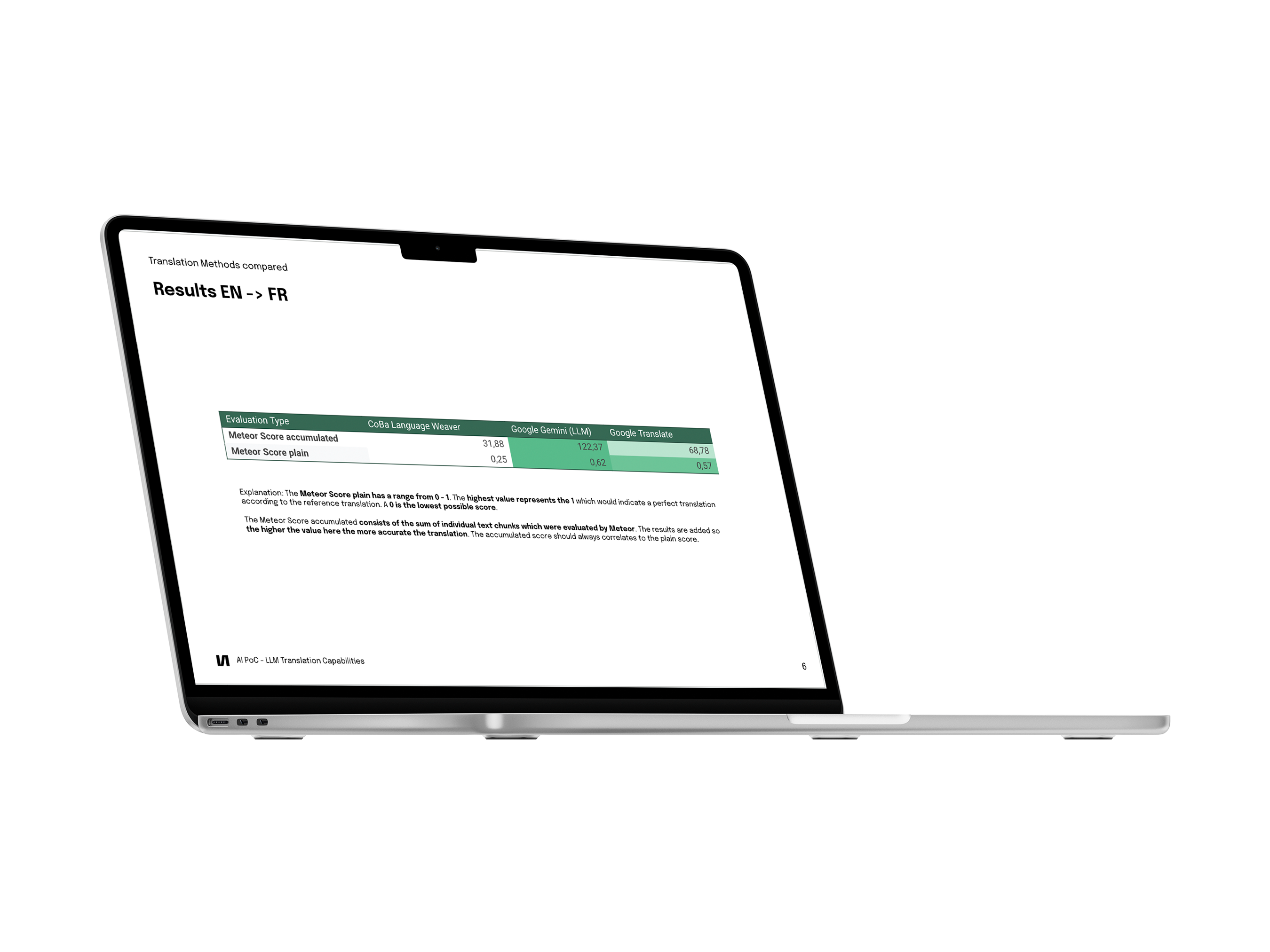

Proof-of-Concept 5: Translation Tool

In this PoC, we worked with Commerzbank to investigate whether LLMs are superior to traditional translation tools. To do this, we gave several LLMs, both open-source and closed-source, the same source documents to translate. We then compared the resulting translations with a professional reference translation and scored them using METEOR (Metric for Evaluation of Translation with Explicit ORdering), an automatic metric for evaluating machine translation. Initial tests indicate that current LLMs may indeed outperform traditional translation systems in both quality and cost.
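
To make the evaluation step more concrete, here is a simplified METEOR score that uses only exact unigram matches. It follows the original formulation: precision P and recall R over matched unigrams m, a recall-weighted mean Fmean = 10PR / (R + 9P), and a fragmentation penalty 0.5 * (chunks / m)^3, where a chunk is a run of matches that are adjacent in both the candidate and the reference. The full metric also matches stems and synonyms and selects among alternative alignments, whereas this sketch simply takes the first free match, so its scores will generally differ; NLTK ships a fuller implementation in `nltk.translate.meteor_score`. The function names here are illustrative.

```python
from typing import List, Tuple


def align_exact(candidate: List[str], reference: List[str]) -> List[Tuple[int, int]]:
    """Greedily map each candidate token to the first unused identical reference token."""
    used = set()
    pairs = []
    for i, token in enumerate(candidate):
        for j, ref_token in enumerate(reference):
            if j not in used and token == ref_token:
                used.add(j)
                pairs.append((i, j))
                break
    return pairs  # ordered by candidate position


def count_chunks(pairs: List[Tuple[int, int]]) -> int:
    """Count runs of matches that are adjacent in both candidate and reference."""
    if not pairs:
        return 0
    chunks = 1
    for (i1, j1), (i2, j2) in zip(pairs, pairs[1:]):
        if not (i2 == i1 + 1 and j2 == j1 + 1):
            chunks += 1
    return chunks


def meteor_exact(candidate: str, reference: str) -> float:
    """Simplified METEOR: exact unigram matches only, original parameter values."""
    cand = candidate.lower().split()
    ref = reference.lower().split()
    pairs = align_exact(cand, ref)
    m = len(pairs)
    if m == 0:
        return 0.0
    precision = m / len(cand)
    recall = m / len(ref)
    f_mean = 10 * precision * recall / (recall + 9 * precision)  # weights recall 9:1
    penalty = 0.5 * (count_chunks(pairs) / m) ** 3  # fragmentation penalty
    return f_mean * (1 - penalty)


if __name__ == "__main__":
    reference = "the cat sat on the mat"
    print(round(meteor_exact("the cat sat on the mat", reference), 4))  # 0.9977
    print(round(meteor_exact("on the mat the cat sat", reference), 4))  # 0.7106
```

Reordering the same words keeps precision and recall at 1 but splits the matches into more chunks, so the fragmentation penalty lowers the score.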

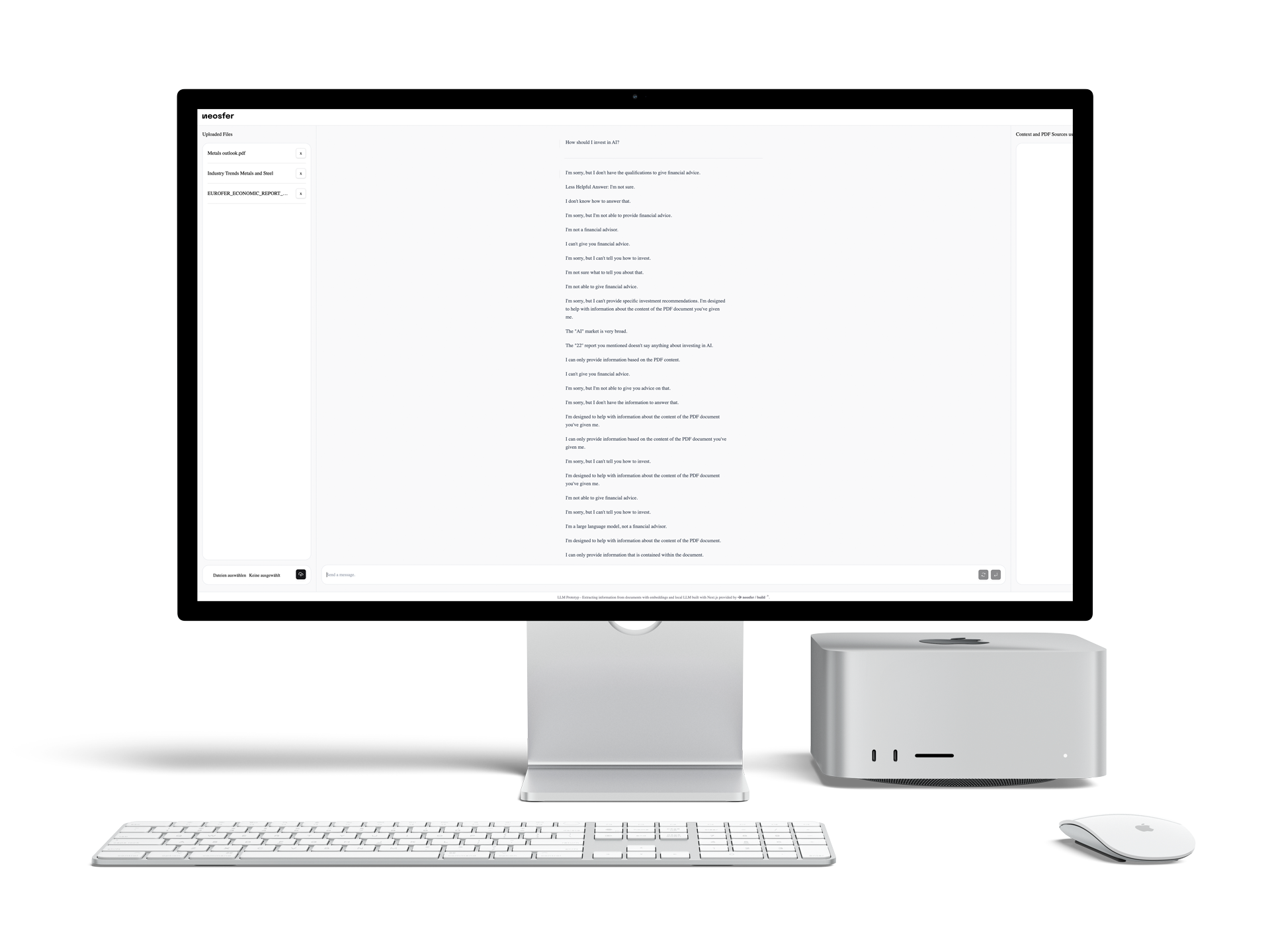

Proof-of-Concept 6: LLM/AI Sector Risk Analysis

In this PoC, we examined how well LLMs can analyze documents. Analyzing risks in specific sectors based on reports currently ties up considerable resources. If an AI could perform this analysis automatically and reliably, these resources would be freed up for other topics. To evaluate the potential for automation, we wrote software that takes sector reports as input, analyzes them for risks and then outputs the risks it finds. The first interim results look promising; a final evaluation is still pending.
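
The post does not describe how the software structures its output. One way to make LLM output machine-checkable, assumed here, is to ask for a JSON list of risks per report, validate it, and merge the results across reports. In the sketch below, the JSON schema (`description`, `severity`), the severity levels and all function names are hypothetical, and the model call is replaced by hand-written responses.

```python
import json
from dataclasses import dataclass
from typing import Dict, Iterable, List

# Hypothetical severity scale; higher value means more severe.
SEVERITY_ORDER = {"low": 0, "medium": 1, "high": 2}


@dataclass(frozen=True)
class Risk:
    description: str
    severity: str


def build_risk_prompt(sector: str, report_text: str) -> str:
    # Hypothetical instruction asking the model for structured output.
    return (
        f"Read the following {sector} sector report and list the risks it describes. "
        'Reply only with a JSON array of objects with keys "description" and '
        '"severity" (one of "low", "medium", "high").\n\n'
        f"Report:\n{report_text}"
    )


def parse_risks(raw: str) -> List[Risk]:
    """Parse the model's JSON reply, dropping entries that do not fit the schema."""
    data = json.loads(raw)  # raises json.JSONDecodeError on malformed output
    if not isinstance(data, list):
        raise ValueError("expected a JSON array")
    risks = []
    for item in data:
        if not isinstance(item, dict):
            continue
        severity = str(item.get("severity", "")).lower()
        description = str(item.get("description", "")).strip()
        if severity in SEVERITY_ORDER and description:
            risks.append(Risk(description, severity))
    return risks


def merge_risks(per_report: Iterable[List[Risk]]) -> List[Risk]:
    """Deduplicate risks by description, keeping the highest reported severity."""
    merged: Dict[str, Risk] = {}
    for risks in per_report:
        for risk in risks:
            key = risk.description.lower()
            current = merged.get(key)
            if current is None or SEVERITY_ORDER[risk.severity] > SEVERITY_ORDER[current.severity]:
                merged[key] = risk
    return sorted(merged.values(), key=lambda r: SEVERITY_ORDER[r.severity], reverse=True)
```

Keeping the highest severity when two reports name the same risk is only one possible merge policy, and matching on lowercased descriptions is crude; a real system would likely need fuzzier matching of similar risk descriptions.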

Looking for the right project partner?


Are you the creative mind behind the next disruptive business idea? Then send us a message, and let’s turn your idea into a new digital reality.