In our connected, digital world, artificial intelligence (AI) is no longer the stuff of science fiction. AI’s reach is far and wide, from intuitive chatbots handling customer queries to algorithms predicting market fluctuations. The promises of efficiency, productivity enhancements, and groundbreaking innovation are compelling.

But let’s take a moment to consider a less-discussed aspect: the challenges and risks embedded within AI’s complex algorithms and processes. Think of AI as a multifaceted tool with enormous potential for both good and harm.

This article aims to guide you through the other side of AI, focusing on industries such as finance and creative arts, to name a few. Our journey isn’t about creating unnecessary fear or projecting a dark and dystopian future. Instead, we wish to engage in an honest conversation, backed by facts, about the potential hurdles and pitfalls that accompany AI’s many benefits.

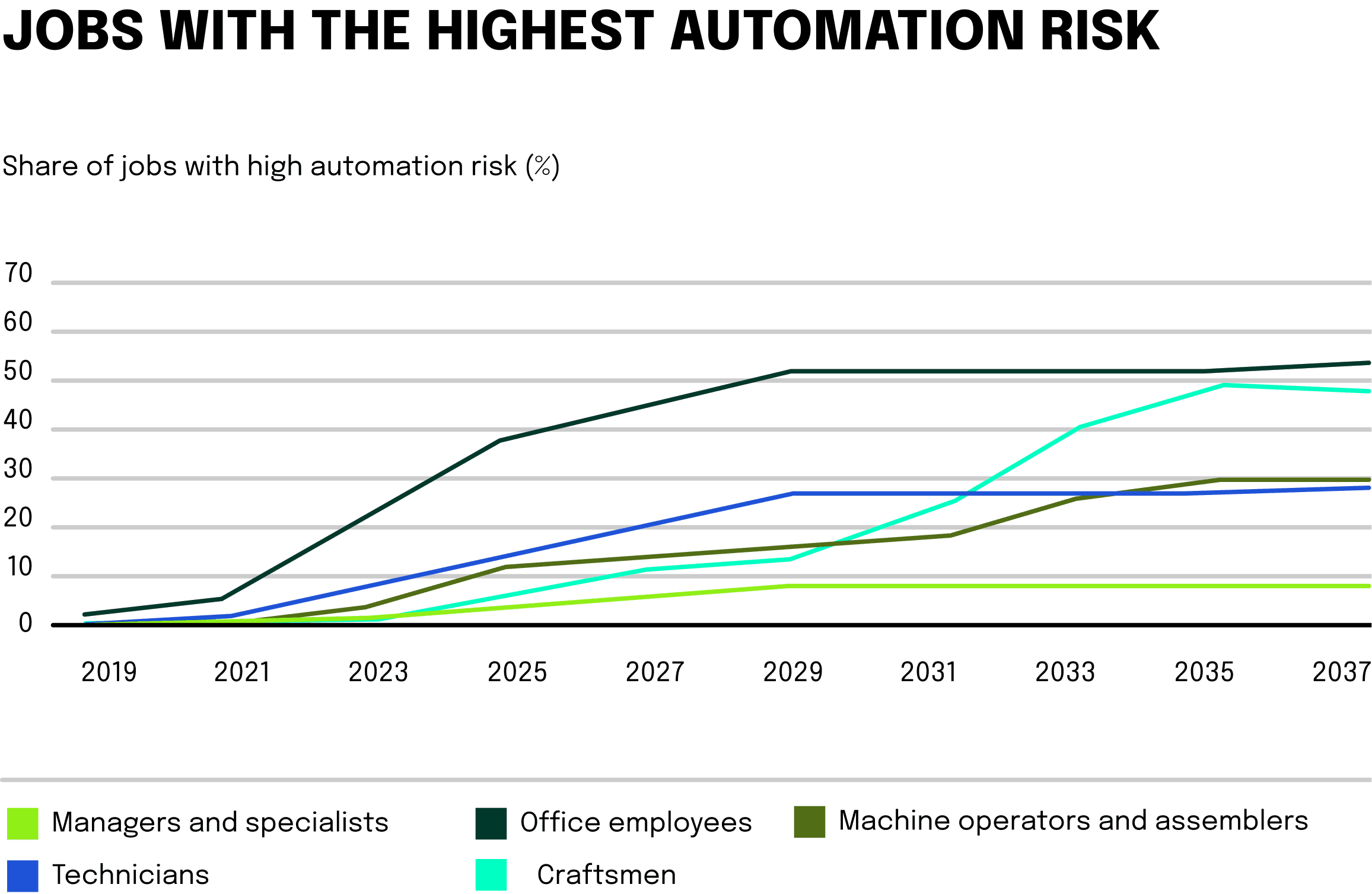

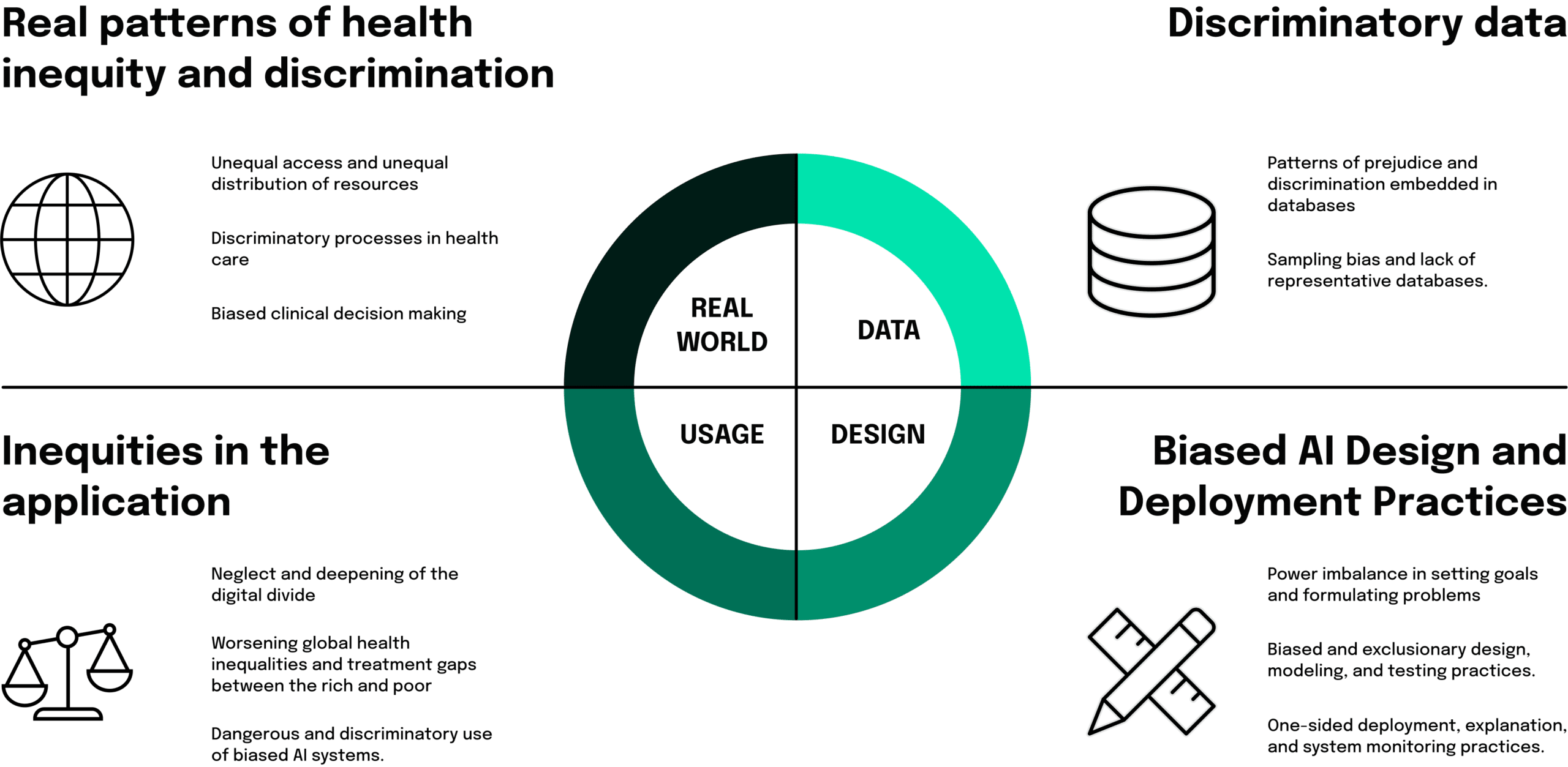

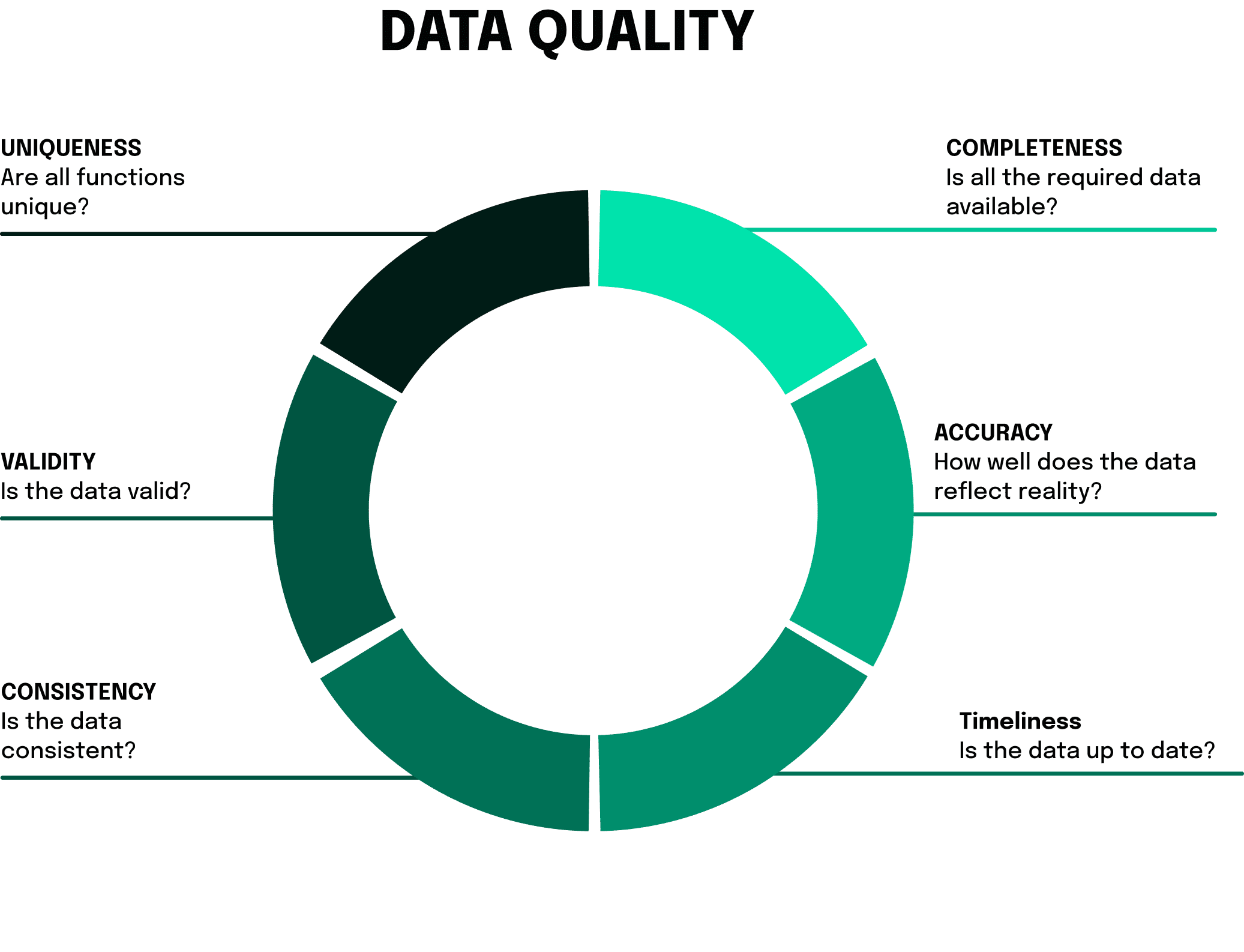

We’ll delve into specific examples within these industries, shedding light on how AI might inadvertently affect the job market, pose threats to security, or even magnify biases. Our mission here is to ignite a balanced and conscientious discourse on the role of AI in our professional lives. The final two sections turn to academic findings and to how the often-hyped discussion of AI’s positive impact neglects important aspects of implementing these systems from a company’s perspective.

Don’t get us wrong; we’re not here to quash the excitement around AI or stifle its growth. We aim to equip you, whether you’re a creative entrepreneur, a finance professional, or an interested observer, with a comprehensive understanding of AI’s multifaceted nature. AI’s promise is vast, but harnessing it requires care and consideration. As we walk through the sections of this article, we’ll examine the potential negatives of AI and look for ways to recognize and perhaps even alleviate them.