Adadi, A., & Berrada, M. (2018). Peeking Inside the Black-Box: A Survey on Explainable Artificial Intelligence (XAI). IEEE Access, 6, 52138–52160. https://doi.org/10.1109/access.2018.2870052

Bareis, J., & Katzenbach, C. (2021). Talking AI into Being: The Narratives and Imaginaries of National AI Strategies and Their Performative Politics. Science, Technology, & Human Values, 47(5), 855–881. https://doi.org/10.1177/01622439211030007

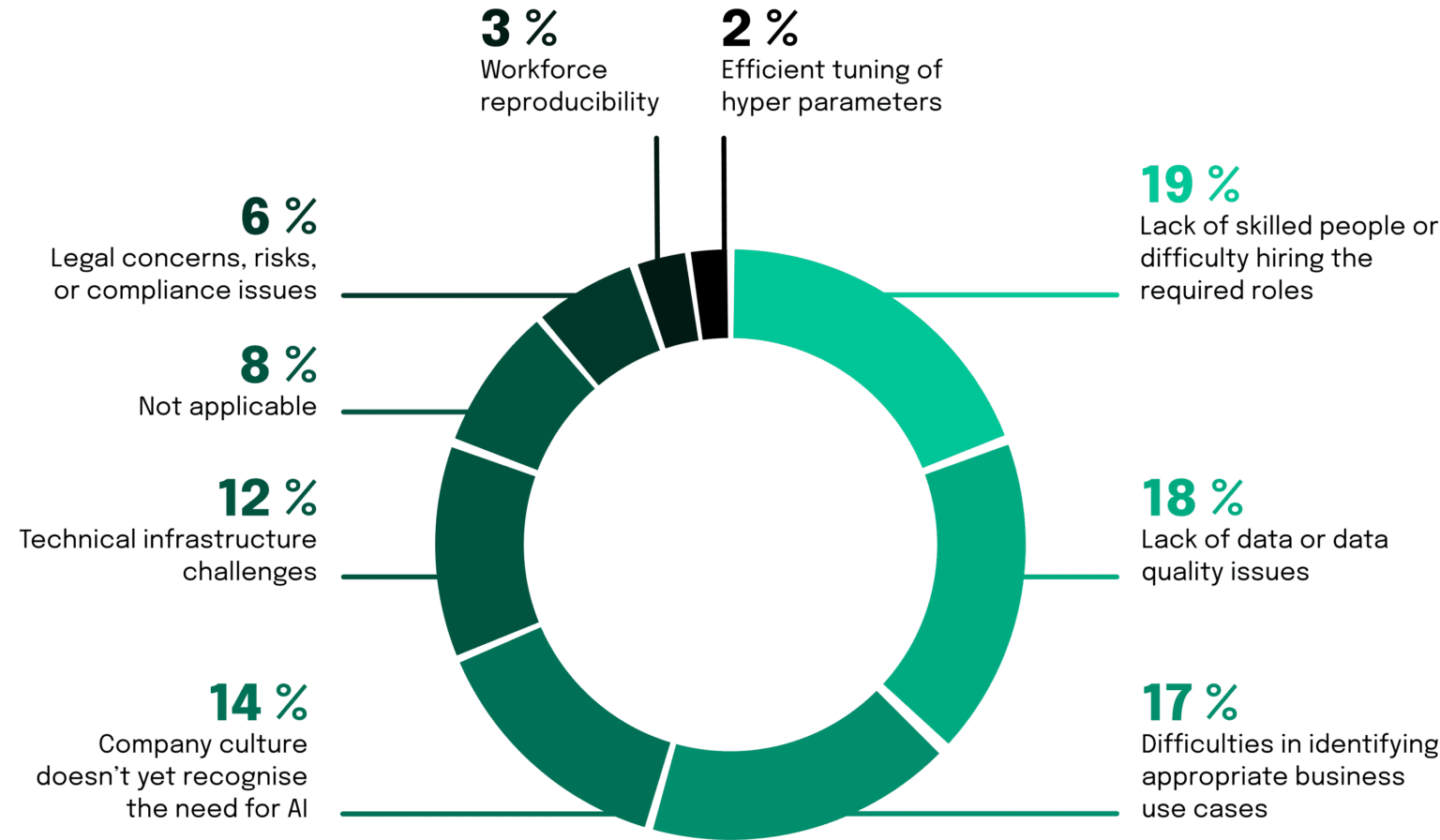

Cubric, M. (2020). Drivers, barriers and social considerations for AI adoption in business and management: A tertiary study. Technology in Society, 62, 101257. https://doi.org/10.1016/j.techsoc.2020.101257

Eitel-Porter, R. (2020). Beyond the promise: implementing ethical AI. AI and Ethics, 1(1), 73–80. https://doi.org/10.1007/s43681-020-00011-6

Floridi, L., & Cowls, J. (2019). A Unified Framework of Five Principles for AI in Society. Harvard Data Science Review, 1(1). https://doi.org/10.1162/99608f92.8cd550d1

Floridi, L., Cowls, J., Beltrametti, M., Chatila, R., Chazerand, P., Dignum, V., Luetge, C., Madelin, R., Pagallo, U., Rossi, F., Schafer, B., Valcke, P., & Vayena, E. (2018). AI4People—An Ethical Framework for a Good AI Society: Opportunities, Risks, Principles, and Recommendations. Minds and Machines, 28(4), 689–707. https://doi.org/10.1007/s11023-018-9482-5

Henry, K. E., Kornfield, R., Sridharan, A., Linton, R. C., Groh, C., Wang, T., Wu, A., Mutlu, B., & Saria, S. (2022). Human–machine teaming is key to AI adoption: clinicians’ experiences with a deployed machine learning system. npj Digital Medicine, 5(1). https://doi.org/10.1038/s41746-022-00597-7

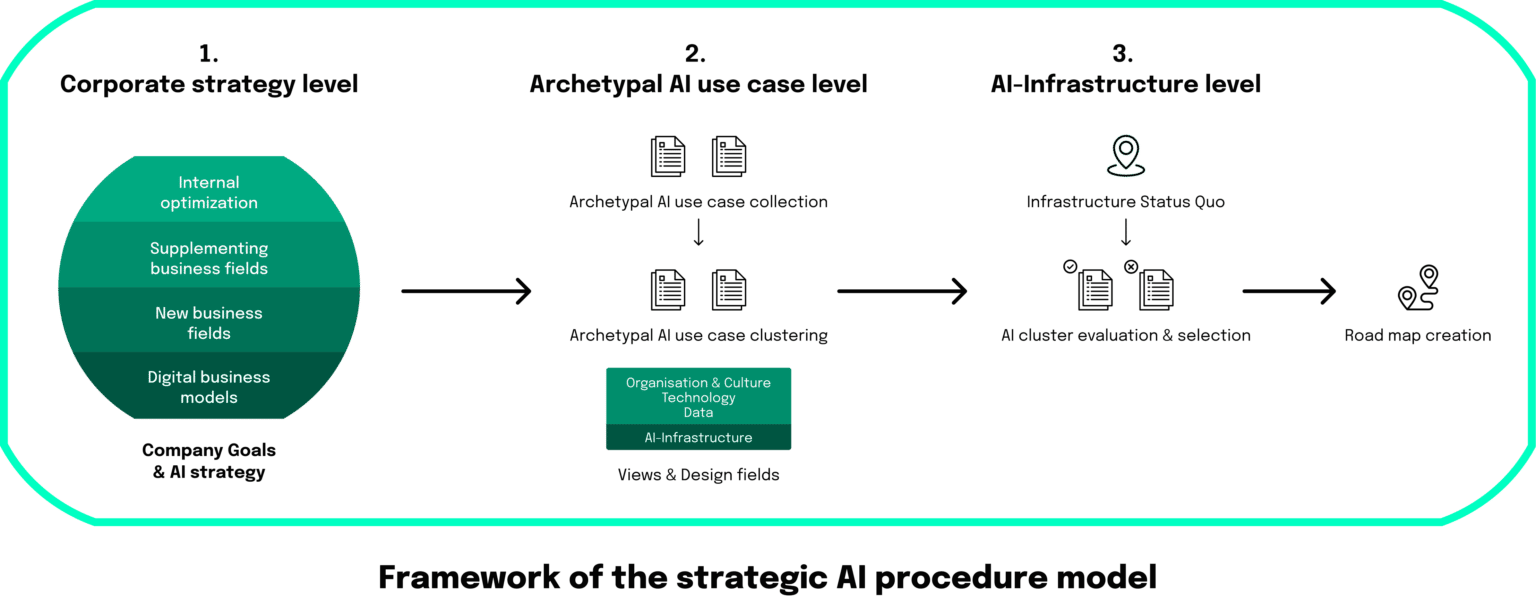

Kruhse-Lehtonen, U., & Hofmann, D. (2020). How to Define and Execute Your Data and AI Strategy. Harvard Data Science Review. https://doi.org/10.1162/99608f92.a010feeb

Mittelstadt, B. (2019). Principles alone cannot guarantee ethical AI. Nature Machine Intelligence, 1(11), 501–507. https://doi.org/10.1038/s42256-019-0114-4

O’Sullivan, S., Nevejans, N., Allen, C., Blyth, A., Leonard, S., Pagallo, U., Holzinger, K., Holzinger, A., Sajid, M. I., & Ashrafian, H. (2019). Legal, regulatory, and ethical frameworks for development of standards in artificial intelligence (AI) and autonomous robotic surgery. The International Journal of Medical Robotics and Computer Assisted Surgery, 15(1), e1968. https://doi.org/10.1002/rcs.1968

Rodgers, P., & Levine, J. (2014). An investigation into 2048 AI strategies. 2014 IEEE Conference on Computational Intelligence and Games. https://doi.org/10.1109/cig.2014.6932920

Saha, S., Gan, Z., Cheng, L., Gao, J., Kafka, O. L., Xie, X., Li, H., Tajdari, M., Kim, H. A., & Liu, W. K. (2021). Hierarchical Deep Learning Neural Network (HiDeNN): An artificial intelligence (AI) framework for computational science and engineering. Computer Methods in Applied Mechanics and Engineering, 373, 113452. https://doi.org/10.1016/j.cma.2020.113452

Sun, T. Q., & Medaglia, R. (2019). Mapping the challenges of Artificial Intelligence in the public sector: Evidence from public healthcare. Government Information Quarterly, 36(2), 368–383. https://doi.org/10.1016/j.giq.2018.09.008